LPP SOFT

Autonomous navigation

- High-level understanding of the environment, UGV localization, and path planning for reliable operation.

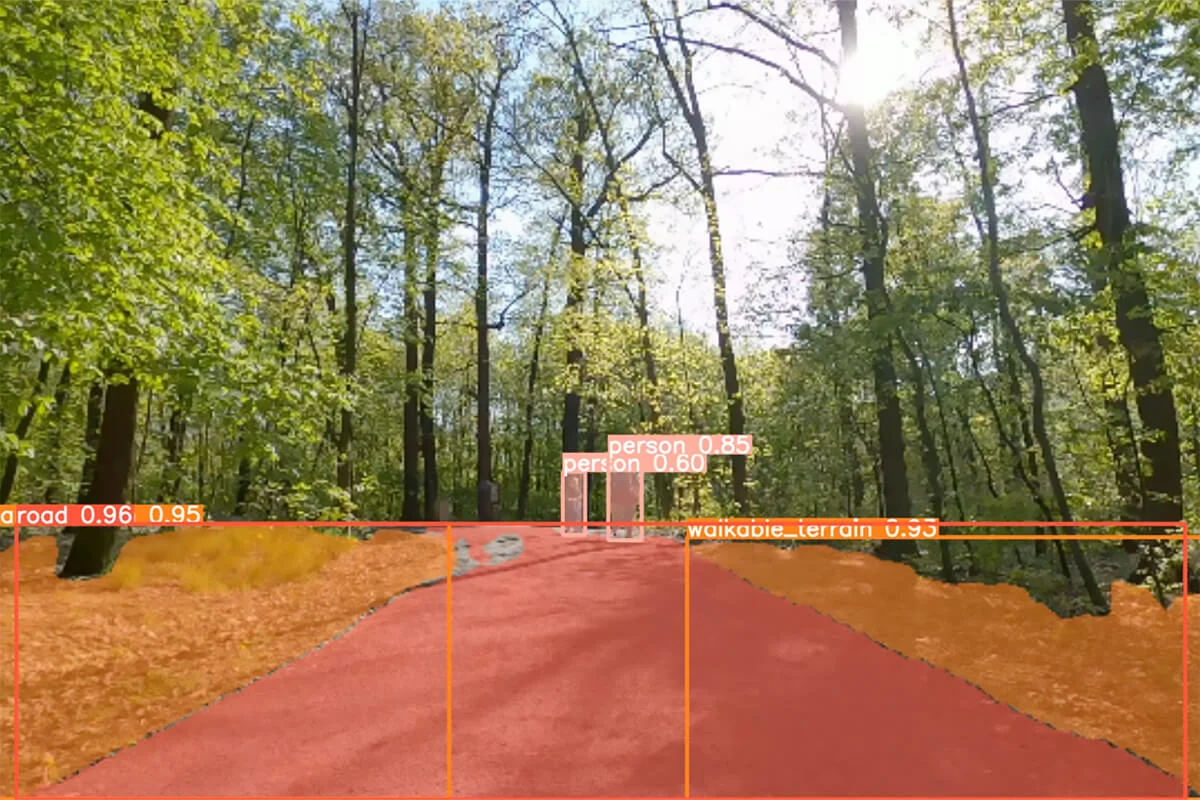

- Object recognition based on AI and machine learning, with risk assessment, for navigating the UGV through unknown terrain.

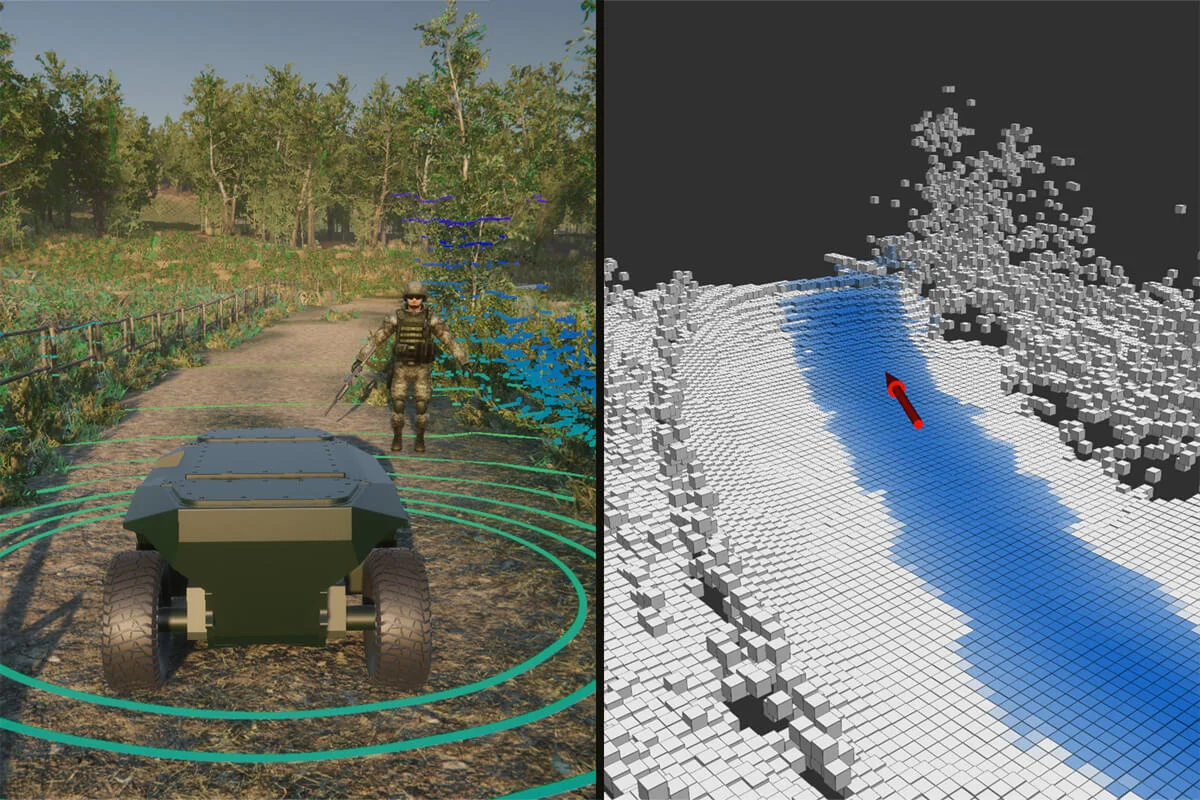

- Virtual environment simulation with simulated GPS, IMU, LiDAR, and camera sensors for testing navigation in digital twin environments.

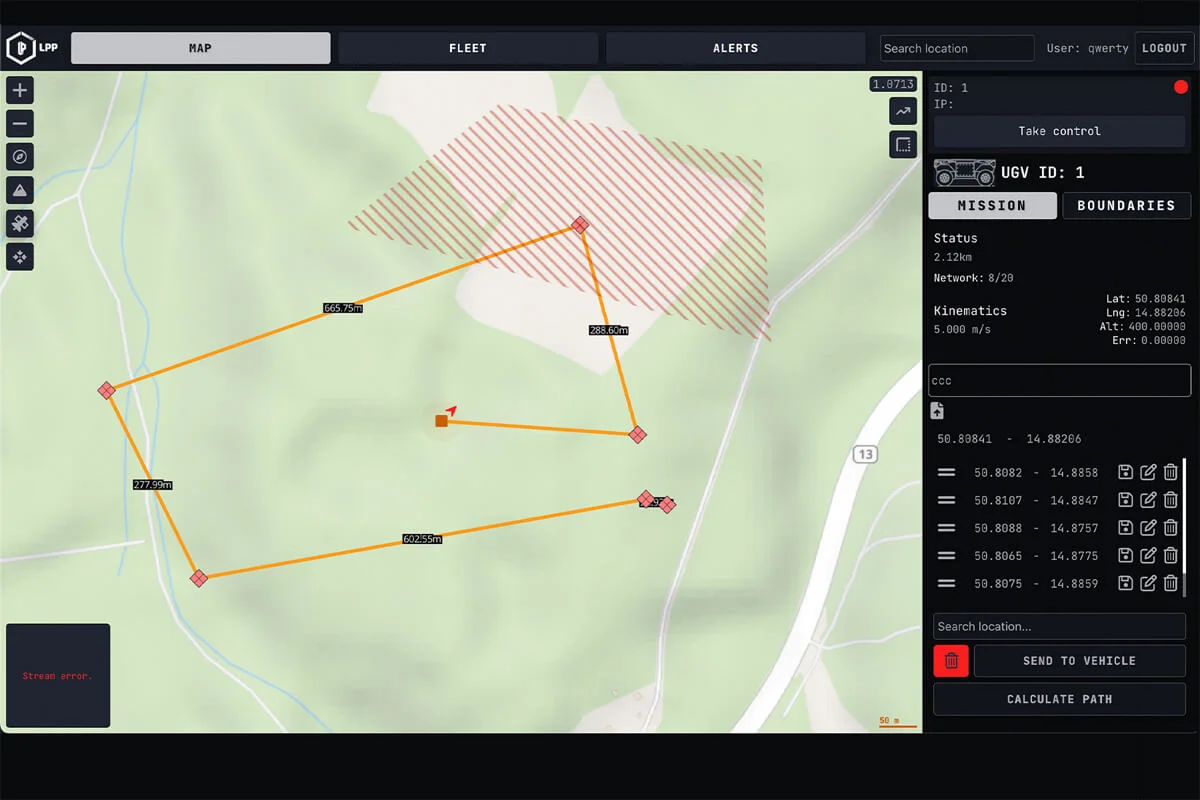

- Global Planner Application for camera streaming, real-time vehicle diagnostics, and mission planning.

- Ready for deployment on any unmanned system or vehicle equipped with the required sensors.

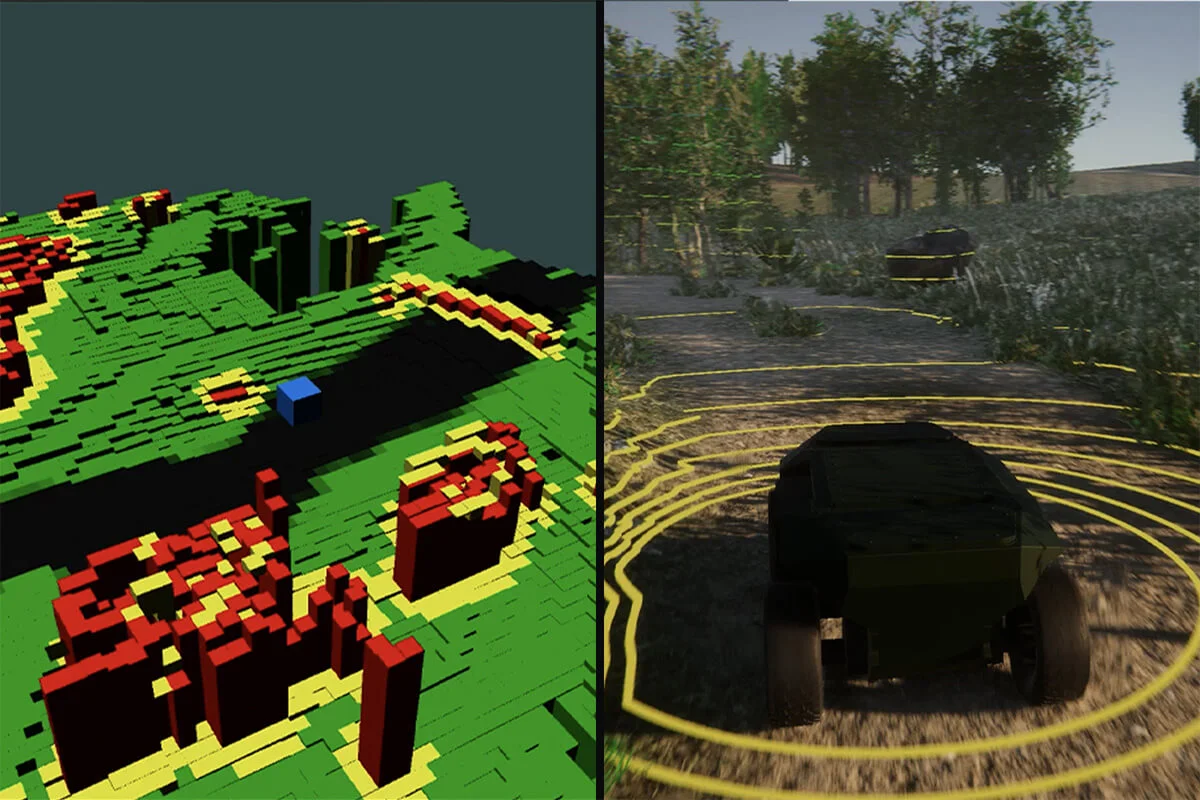

The system uses an IMU, GPS, wheel encoders, and a magnetic compass to determine the UGV's position and orientation. The gathered information is used to build a map of the environment for path planning and obstacle avoidance. The planning and control function generates global and local motion plans based on the map and the UGV's current position.
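As a rough illustration of how these sensors can be combined, the sketch below predicts the pose from wheel-encoder distance and IMU yaw rate, then nudges it toward the GPS position and compass heading. It is a simplified complementary filter under assumed conventions, not the product's actual estimator; the names `Pose`, `predict`, `correct`, and the `gain` parameter are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres along the local x axis
    y: float        # metres along the local y axis
    heading: float  # radians, counter-clockwise from the x axis


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def predict(pose: Pose, distance: float, yaw_rate: float, dt: float) -> Pose:
    """Dead reckoning: advance the pose using the distance reported by the
    wheel encoders and the yaw rate reported by the IMU."""
    heading = wrap_angle(pose.heading + yaw_rate * dt)
    return Pose(
        pose.x + distance * math.cos(heading),
        pose.y + distance * math.sin(heading),
        heading,
    )


def correct(pose: Pose, gps_xy: tuple, compass_heading: float,
            gain: float = 0.2) -> Pose:
    """Blend the predicted pose with absolute measurements.
    `gain` (assumed value) sets how strongly GPS and compass pull the estimate."""
    x = pose.x + gain * (gps_xy[0] - pose.x)
    y = pose.y + gain * (gps_xy[1] - pose.y)
    heading_error = wrap_angle(compass_heading - pose.heading)
    return Pose(x, y, wrap_angle(pose.heading + gain * heading_error))
```

A production system would more likely use a Kalman-type filter with per-sensor noise models; the fixed gain here only shows the predict-then-correct structure.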

The human-machine interface (HMI) provides operators with a GUI and a handheld controller for semi-autonomous UGV control or fully autonomous operation. The HMI also streams camera output, displays real-time vehicle diagnostics, and allows for mission planning.

The autonomous decision-making feature uses machine learning algorithms and sensor fusion to navigate the UGV dynamically through unknown terrain and to choose the best action while minimizing risk.

The specific sensors used in a UGV depend on the intended function and level of autonomy required. The system can integrate with existing unmanned systems and be used on any vehicle with the appropriate sensors. The software architecture is designed to be compatible with ROS1/ROS2 systems.

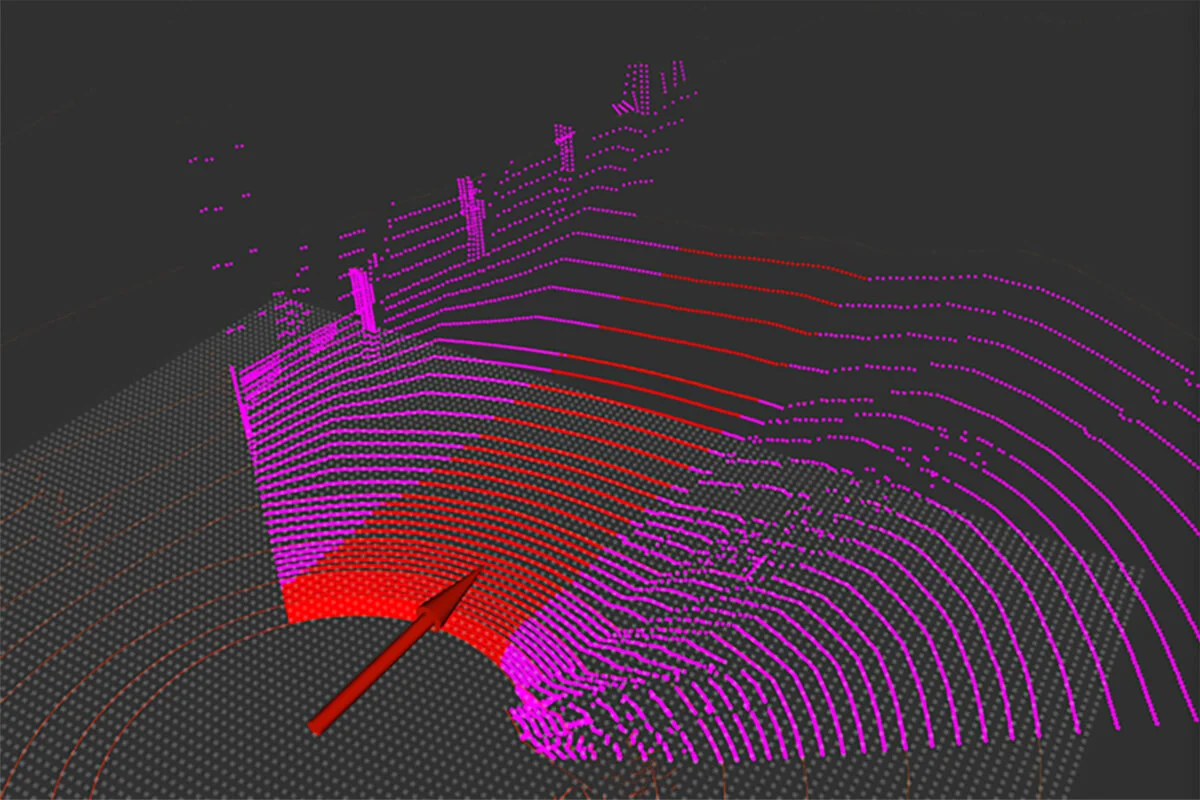

The autonomous navigation system uses cameras, LiDAR, radar, and an IMU to provide robust driving support and autonomy. It transfers information efficiently from the sensors to the planning and control layer, extending a basic control architecture with segmentation and LiDAR SLAM for seamless operation. With waypoint-based driving and an anti-collision feature, the system not only makes informed decisions but also ensures successful traversal of diverse areas.
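To make waypoint-based driving with an anti-collision feature more concrete, here is a minimal sketch that steers toward the next waypoint and scales speed down as the nearest obstacle gets closer. The function name `drive_command` and the values for `cruise_speed`, `stop_range`, and `slow_range` are assumptions chosen for illustration, not parameters of the product.

```python
import math


def drive_command(pose, waypoint, obstacle_range,
                  cruise_speed=1.5, stop_range=2.0, slow_range=6.0):
    """Return (speed in m/s, heading error in rad) toward `waypoint`.

    pose           -- (x, y, heading) of the vehicle, heading in radians
    waypoint       -- (x, y) of the next waypoint
    obstacle_range -- distance in metres to the nearest obstacle ahead
    """
    x, y, heading = pose
    bearing = math.atan2(waypoint[1] - y, waypoint[0] - x)
    # Signed smallest angle between current heading and bearing to the waypoint.
    heading_error = math.atan2(math.sin(bearing - heading),
                               math.cos(bearing - heading))

    if obstacle_range <= stop_range:
        speed = 0.0                      # anti-collision: full stop
    elif obstacle_range < slow_range:
        # Linear ramp from 0 at stop_range to cruise_speed at slow_range.
        ratio = (obstacle_range - stop_range) / (slow_range - stop_range)
        speed = cruise_speed * ratio
    else:
        speed = cruise_speed
    return speed, heading_error
```

For example, `drive_command((0.0, 0.0, 0.0), (10.0, 0.0), 1.0)` commands zero speed because the obstacle is inside the assumed stop range.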

The autonomous navigation system implements Simultaneous Localization and Mapping (SLAM) to integrate separate datasets into a unified picture, which is then used in the training process. Based on terrain analysis, obstacle detection, and the planned trajectory, it sets traverse capabilities and incorporates off-road obstacle avoidance into the manoeuvre graph topology development environment. The outputs of the training process are integrated into the models deployed on unmanned vehicles. Integrating map layers into the planning process and trajectory estimation further enhances its effectiveness.

The autonomous navigation system employs segmentation techniques, analyses data objects through internally trained modules for obstacle detection, and establishes trajectory and boundary conditions. When the route becomes impassable, for example because of obstacles, an insufficient map base, missing GPS information, or a non-functioning LiDAR, the system re-plans the trajectory so that navigation can continue uninterrupted.
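The re-planning conditions listed above can be sketched as a simple status check. The `Status` fields, the `ReplanReason` names, and the `min_coverage` threshold are hypothetical and only mirror the four conditions named in the text; the real system's interfaces are not described in this document.

```python
from dataclasses import dataclass
from enum import Enum, auto


class ReplanReason(Enum):
    OBSTACLE = auto()      # planned path is blocked
    MAP_GAP = auto()       # insufficient map base along the route
    GPS_LOST = auto()      # missing GPS information
    LIDAR_FAULT = auto()   # non-functioning LiDAR


@dataclass
class Status:
    path_blocked: bool
    map_coverage: float    # fraction of the route covered by the map, 0..1
    gps_fix: bool
    lidar_ok: bool


def replan_reasons(status: Status, min_coverage: float = 0.8) -> list:
    """Return every condition that currently calls for trajectory re-planning.
    An empty list means the current trajectory can be kept."""
    reasons = []
    if status.path_blocked:
        reasons.append(ReplanReason.OBSTACLE)
    if status.map_coverage < min_coverage:   # assumed threshold
        reasons.append(ReplanReason.MAP_GAP)
    if not status.gps_fix:
        reasons.append(ReplanReason.GPS_LOST)
    if not status.lidar_ok:
        reasons.append(ReplanReason.LIDAR_FAULT)
    return reasons
```

Returning the full list of reasons, rather than a single flag, lets a planner choose a different fallback for each case, such as relying on odometry when GPS is lost.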

The application interface provides operators with a GUI and a handheld controller for semi-autonomous control or fully autonomous operation. The app also streams camera output, displays real-time vehicle diagnostics, and allows for mission planning.

The system can integrate with existing unmanned systems and be used on any vehicle equipped with the required sensors. The software architecture is optimized for offline operation.

Detailed information

| Function | Description |
| --- | --- |
| Perception | Uses sensors such as cameras, LiDAR, and radar to identify and track obstacles in its path. |
| Navigation | Uses GPS, IMUs, and wheel encoders to determine its location and to plan and execute its route to a given destination. |
| Obstacle Avoidance | Detects obstacles using sensors such as LiDAR and radar, and adjusts its trajectory or speed to avoid them. |
| Mapping | Creates a 3D map of its surroundings to plan its route and avoid obstacles. |
| Path Planning | Plans an optimal route considering terrain and obstacles, adjusting in real time based on changes in the environment. |
| Decision-making | Makes intelligent decisions in real time based on gathered information and assigned tasks. |
| Control | Controls movement using actuators such as motors or hydraulics. |
| Communication | Designed to be offline first. Also communicates with a command centre over wireless links such as a mesh network, Wi-Fi, or cellular networks. |
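Path planning that accounts for both terrain and obstacles can be illustrated with A* search on a cost grid, where each cell holds a traversal cost and blocked cells are marked `None`. This is a generic textbook technique shown under assumed conventions (4-connected grid, costs of at least 1), not a description of the planner used in the product; `plan_path` is a hypothetical name.

```python
import heapq


def plan_path(grid, start, goal):
    """A* on a 4-connected grid.

    grid[r][c] is the cost of entering a cell (>= 1, higher for rough
    terrain) or None for an obstacle. Returns the list of (row, col)
    cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance; admissible because every step costs >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = grid[nr][nc]
            if step is None:
                continue  # obstacle
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(open_heap,
                               (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

Real-time adjustment can be approximated by updating the grid when new obstacles are detected and calling the planner again from the vehicle's current cell.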

Surveillance

A UGV system for monitoring specific areas such as borders, military installations, or public spaces can detect and identify potential threats using long-range cameras with extended zoom, thermal imaging for detecting heat signatures, and acoustic sensors for detecting sound.

Reconnaissance

A UGV system equipped with high-resolution cameras, LiDAR sensors for 3D mapping, and infrared sensors for night vision provides real-time information about the environment.

Demining

A UGV system for clearing hazardous areas can use metal detectors, ground-penetrating radar, robotic arms with specialized tools, and a remote detonation system to clear minefields or operate in dangerous areas.

Logistics

A UGV system for transporting supplies, equipment, or personnel over short distances can operate autonomously or under remote control. It offers a payload capacity of up to 500 kg, a rugged chassis for off-road use, and GPS navigation.